Inspecting Individual Dakota Evaluations

After your Dakota run has finished, you may wish to inspect the results of each individual evaluation before using the results data. This is especially true if you are working with a finicky analysis driver, where one or more evaluations may fail and need to be re-run.

A quick detour to talk about lazy drivers (and why you should use them)

The Dakota GUI provides a special type of analysis driver called a lazy driver. A lazy driver decouples each individual evaluation from the Dakota process, while still allowing Dakota to resume later and collect the results. You can read more about lazy drivers and how to generate one on the New Lazy Driver wizard tutorial page.

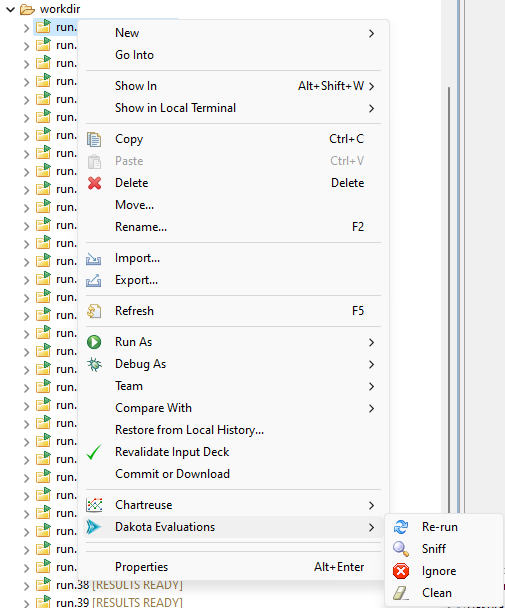

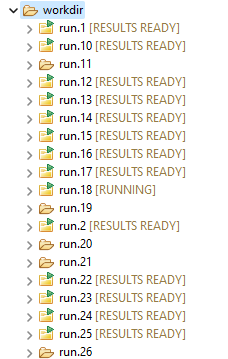

Shown below is a Dakota working directory midway through generating evaluation results:

Without a lazy driver, you will not see the folder decorations or the bracketed text to the right of each folder, which indicate the status of each evaluation.

Over the course of a study, you will see one of the following statuses next to each evaluation directory:

[RUNNING] : Indicates that the driver is still running in this evaluation directory

[RESULTS READY] : Indicates that the driver has finished, but Dakota needs to be run a second time to collect the results from each directory

[SUCCESSFUL] : Indicates that the driver has finished and Dakota has collected the results

[FAILED] : Indicates that the driver failed to generate results

[IGNORED] : Indicates that the user has manually elected to ignore this evaluation directory (see below for more information)
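To make the lifecycle described above more concrete, here is a minimal Python sketch that models the statuses as an enum, along with the transitions the descriptions imply. The names `EvalStatus`, `TRANSITIONS`, `can_transition`, and `parse_label` are hypothetical; the Dakota GUI does not expose this API. Two transitions are assumptions rather than documented behavior: an [IGNORED] mark is allowed from any state, and a [FAILED] evaluation may be re-run (returning to [RUNNING]).

```python
from enum import Enum


class EvalStatus(Enum):
    """Status labels shown next to each evaluation directory."""
    RUNNING = "RUNNING"
    RESULTS_READY = "RESULTS READY"
    SUCCESSFUL = "SUCCESSFUL"
    FAILED = "FAILED"
    IGNORED = "IGNORED"


# Hypothetical transition table for illustration only; it is not taken
# from the Dakota GUI's implementation.
TRANSITIONS = {
    # The driver either produces results or fails.
    EvalStatus.RUNNING: {EvalStatus.RESULTS_READY, EvalStatus.FAILED},
    # A second Dakota run collects the results.
    EvalStatus.RESULTS_READY: {EvalStatus.SUCCESSFUL},
    EvalStatus.SUCCESSFUL: set(),
    # Assumption: a failed evaluation can be re-run.
    EvalStatus.FAILED: {EvalStatus.RUNNING},
    EvalStatus.IGNORED: set(),
}


def can_transition(current: EvalStatus, new: EvalStatus) -> bool:
    """Return True if moving from `current` to `new` fits the assumed lifecycle."""
    # Assumption: ignoring a directory is a manual choice allowed in any state.
    if new is EvalStatus.IGNORED:
        return True
    return new in TRANSITIONS[current]


def parse_label(text: str) -> EvalStatus:
    """Convert a bracketed label such as '[RESULTS READY]' into an EvalStatus."""
    return EvalStatus(text.strip().strip("[]"))
```

For example, `can_transition(EvalStatus.RUNNING, EvalStatus.SUCCESSFUL)` is `False` in this model, because results must first reach [RESULTS READY] and then be collected by a second Dakota run.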

The typical workflow for a Dakota study with a lazy driver is as follows:

Run Dakota using a standard run configuration.

Wait for all evaluation results in the working directory to reach a status of [RESULTS READY].

Run Dakota a second time, using the same run configuration.

Wait for all evaluation results in the working directory to reach a status of [SUCCESSFUL]. This indicates that the results have been collected by Dakota and added to its tabular data file and/or its HDF5 results file.
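The two-pass workflow can be summarized as a small decision function. The sketch below uses a hypothetical helper, `next_step`, that is not part of Dakota or the Dakota GUI. It takes a mapping from evaluation directory name to status label (for example, as you might transcribe it from the working directory view) and suggests what to do next. Treating [FAILED] evaluations as something to inspect before the second run is an assumption based on the advice at the top of this page.

```python
from collections import Counter
from typing import Mapping


def next_step(statuses: Mapping[str, str]) -> str:
    """Suggest the next action in the two-pass lazy-driver workflow.

    `statuses` maps a (hypothetical) evaluation directory name to its label
    without brackets, e.g. {"eval.1": "RESULTS READY"}.
    Directories marked IGNORED are left out of the decision.
    """
    counts = Counter(s for s in statuses.values() if s != "IGNORED")
    if counts["RUNNING"]:
        # Some drivers have not finished yet.
        return "wait"
    if counts["FAILED"]:
        # Assumption: fix or ignore failures before collecting results.
        return "inspect failed evaluations"
    if counts["RESULTS READY"]:
        # Results exist on disk but Dakota has not collected them yet.
        return "run Dakota again with the same run configuration"
    # Everything remaining is SUCCESSFUL (or ignored).
    return "done"
```

With `{"eval.1": "RESULTS READY", "eval.2": "IGNORED"}`, the helper suggests running Dakota again, since the only evaluation still counted is waiting to be collected.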