Uncertainty Quantification

Overview

At a high level, uncertainty quantification (UQ) or nondeterministic analysis is the process of (1) characterizing input uncertainties, (2) forward propagating these uncertainties through a computational model, and (3) performing statistical or interval assessments on the resulting responses. This process determines the effect of uncertainties and assumptions on model outputs or results. In Dakota, uncertainty quantification methods primarily focus on the forward propagation and analysis parts of the process (2 and 3), where probabilistic or interval information on parametric inputs are mapped through the computational model to assess statistics or intervals on outputs. For an overview of these approaches for engineering applications, consult [HM00]. Dakota also has emerging methods for inference or inverse UQ, such as Bayesian calibration. These methods help with (1) by inferring a statistical characterization of input parameters that is consistent with available observational data.

UQ is related to sensitivity analysis in that the common goal is to gain an understanding of how variations in the parameters affect the response functions of the engineering design problem. However, for UQ, some or all of the components of the parameter vector are considered to be uncertain as specified by particular probability distributions (e.g., normal, exponential, extreme value) or other uncertainty specifications. By assigning specific distributional structure to the inputs, distributional structure for the outputs (i.e, response statistics) can be inferred. This migrates from an analysis that is more qualitative in nature, in the case of sensitivity analysis, to an analysis that is more rigorously quantitative.

UQ methods can be distinguished by their ability to propagate aleatory or epistemic input uncertainty characterizations, where aleatory uncertainties are irreducible variabilities inherent in nature and epistemic uncertainties are reducible uncertainties resulting from a lack of knowledge.

For aleatory uncertainties, probabilistic methods are commonly used for computing response distribution statistics based on input probability distribution specifications. Conversely, for epistemic uncertainties, use of probability distributions is based on subjective prior knowledge rather than objective data, and we may alternatively explore nonprobabilistic methods based on interval specifications.

Summary of Dakota UQ Methods

Dakota contains capabilities for performing nondeterministic analysis with both types of input uncertainty. These UQ methods have been developed by Sandia Labs, in conjunction with collaborators in academia [EAP+07, GRH99, GS91, TSE10].

The aleatory UQ methods in Dakota include various sampling-based approaches (e.g., Monte Carlo and Latin Hypercube sampling), local and global reliability methods, and stochastic expansion (polynomial chaos expansions, stochastic collocation, and functional tensor train) approaches. The epistemic UQ methods include local and global interval analysis and Dempster-Shafer evidence theory. These are summarized below and then described in more depth in subsequent sections of this chapter. Dakota additionally supports mixed aleatory/epistemic UQ via interval-valued probability, second-order probability, and Dempster-Shafer theory of evidence. These involve advanced model recursions and are described in Mixed Aleatory-Epistemic UQ.

LHS (Latin Hypercube Sampling): This package provides both Monte Carlo (random) sampling and Latin Hypercube sampling methods, which can be used with probabilistic variables in Dakota that have the following distributions: normal, lognormal, uniform, loguniform, triangular, exponential, beta, gamma, gumbel, frechet, weibull, poisson, binomial, negative binomial, geometric, hypergeometric, and user-supplied histograms. In addition, LHS accounts for correlations among the variables [IS84], which can be used to accommodate a user-supplied correlation matrix or to minimize correlation when a correlation matrix is not supplied. In addition to a standard sampling study, we support the capability to perform “incremental” LHS, where a user can specify an initial LHS study of N samples, and then re-run an additional incremental study which will double the number of samples (to 2N, with the first N being carried from the initial study). The full incremental sample of size 2N is also a Latin Hypercube, with proper stratification and correlation. Statistics for each increment are reported separately at the end of the study.

Reliability Methods: This suite of methods includes both local and global reliability methods. Local methods include first- and second-order versions of the Mean Value method (MVFOSM and MVSOSM) and a variety of most probable point (MPP) search methods, including the Advanced Mean Value method (AMV and AMV\(^2\)), the iterated Advanced Mean Value method (AMV+ and AMV\(^2\)+), the Two-point Adaptive Nonlinearity Approximation method (TANA-3), and the traditional First Order and Second Order Reliability Methods (FORM and SORM) [HM00]. The MPP search methods may be used in forward (Reliability Index Approach (RIA)) or inverse (Performance Measure Approach (PMA)) modes, as dictated by the type of level mappings. Each of the MPP search techniques solve local optimization problems in order to locate the MPP, which is then used as the point about which approximate probabilities are integrated (using first- or second-order integrations in combination with refinements based on importance sampling). Global reliability methods are designed to handle nonsmooth and multimodal failure surfaces, by creating global approximations based on Gaussian process models. They accurately resolve a particular contour of a response function and then estimate probabilities using multimodal adaptive importance sampling.

Stochastic Expansion Methods: Theoretical development of these techniques mirrors that of deterministic finite element analysis utilizing the notions of projection, orthogonality, and weak convergence [GRH99], [GS91].

Rather than focusing on estimating specific statistics (e.g., failure probability), they form an approximation to the functional relationship between response functions and their random inputs, which provides a more complete uncertainty representation for use in more advanced contexts, such as coupled multi-code simulations. Expansion methods include polynomial chaos expansions (PCE), which expand in a basis of multivariate orthogonal polynomials (e.g., Hermite, Legendre) that are tailored to representing particular input probability distributions (e.g., normal, uniform); stochastic collocation (SC), which expand in a basis of multivariate interpolation polynomials (e.g., Lagrange); and functional tensor train (FTT), which leverages concepts from data compression to expand using low rank products of polynomial cores. For PCE, expansion coefficients may be evaluated using a spectral projection approach (based on sampling, tensor-product quadrature, Smolyak sparse grid, or cubature methods for numerical integration) or a regression approach (least squares or compressive sensing). For SC, interpolants are formed over tensor-product or sparse grids and may be local or global, value-based or gradient-enhanced, and nodal or hierarchical. In global value-based cases (Lagrange polynomials), the barycentric formulation is used [BT04, Hig04, Kli05] to improve numerical efficiency and stability. For FTT, regression via regularized nonlinear least squares is employed for recovering low rank coefficients, and cross-validation schemes are available to determine the best rank and polynomial basis order settings. Each of these methods provide analytic response moments and variance-based metrics; however, PDFs and CDF/CCDF mappings are computed numerically by sampling on the expansion.

Importance Sampling: Importance sampling is a method that allows one to estimate statistical quantities such as failure probabilities in a way that is more efficient than Monte Carlo sampling. The core idea in importance sampling is that one generates samples that are preferentially placed in important regions of the space (e.g. in or near the failure region or user-defined region of interest), then appropriately weights the samples to obtain an unbiased estimate of the failure probability.

Adaptive Sampling: The goal in performing adaptive sampling is to construct a surrogate model that can be used as an accurate predictor of an expensive simulation. The aim is to build a surrogate that minimizes the error over the entire domain of interest using as little data as possible from the expensive simulation. The adaptive sampling methods start with an initial LHS sample, and then adaptively choose samples that optimize a particular criteria. For example, if a set of additional possible sample points are generated, one criteria is to pick the next sample point as the point which maximizes the minimum distance to the existing points (maximin). Another criteria is to pick the sample point where the surrogate indicates the most uncertainty in its prediction.

Recently, Dakota added a new method to assess failure probabilities based on ideas from computational geometry. Part of the idea underpinning this method is the idea of throwing “darts” which are higher dimensional objects than sample points (e.g. lines, planes, etc.) The POF (Probability-of-Failure) darts method uses these objects to estimate failure probabilities.

Interval Analysis: Interval analysis is often used to model epistemic uncertainty. In interval analysis, one assumes that nothing is known about an epistemic uncertain variable except that its value lies somewhere within an interval. In this situation, it is NOT assumed that the value has a uniform probability of occurring within the interval. Instead, the interpretation is that any value within the interval is a possible value or a potential realization of that variable. In interval analysis, the uncertainty quantification problem is one of determining the resulting bounds on the output (defining the output interval) given interval bounds on the inputs. Again, any output response that falls within the output interval is a possible output with no frequency information assigned to it.

We have the capability to perform interval analysis using either global or local methods. In the global approach, one uses either a global optimization method (based on a Gaussian process surrogate model) or a sampling method to assess the bounds. The local method uses gradient information in a derivative-based optimization approach, using either SQP (sequential quadratic programming) or a NIP (nonlinear interior point) method to obtain bounds.

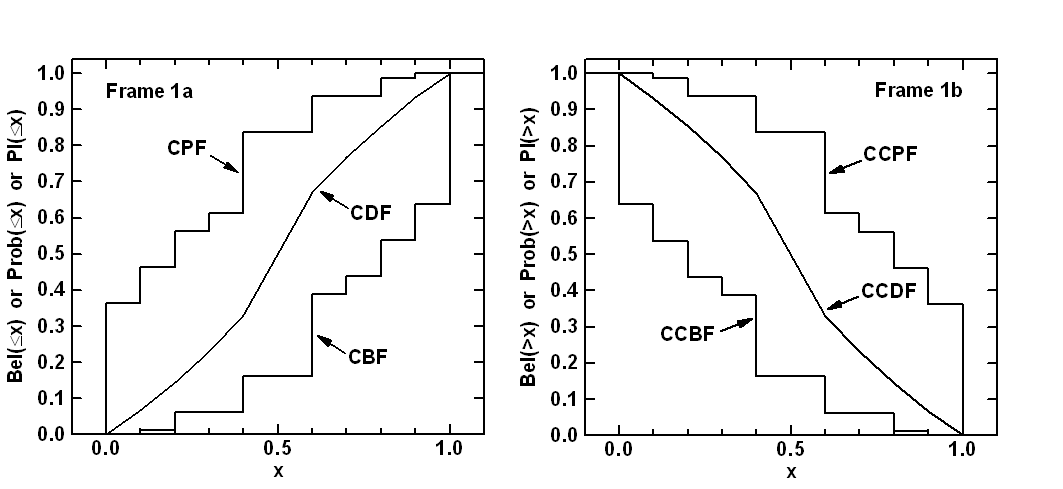

Dempster-Shafer Theory of Evidence: The objective of evidence theory is to model the effects of epistemic uncertainties. Epistemic uncertainty refers to the situation where one does not know enough to specify a probability distribution on a variable. Sometimes epistemic uncertainty is referred to as subjective, reducible, or lack of knowledge uncertainty. In contrast, aleatory uncertainty refers to the situation where one does have enough information to specify a probability distribution. In Dempster-Shafer theory of evidence, the uncertain input variables are modeled as sets of intervals. The user assigns a basic probability assignment (BPA) to each interval, indicating how likely it is that the uncertain input falls within the interval. The intervals may be overlapping, contiguous, or have gaps. The intervals and their associated BPAs are then propagated through the simulation to obtain cumulative distribution functions on belief and plausibility. Belief is the lower bound on a probability estimate that is consistent with the evidence, and plausibility is the upper bound on a probability estimate that is consistent with the evidence. In addition to the full evidence theory structure, we have a simplified capability for users wanting to perform pure interval analysis (e.g. what is the interval on the output given intervals on the input) using either global or local optimization methods. Interval analysis is often used to model epistemic variables in nested analyses, where probability theory is used to model aleatory variables.

Bayesian Calibration: In Bayesian calibration, uncertain input parameters are initially characterized by a “prior” distribution. A Bayesian calibration approach uses experimental data together with a likelihood function, which describes how well a realization of the parameters is supported by the data, to update this prior knowledge. The process yields a posterior distribution of the parameters most consistent with the data, such that running the model at samples from the posterior yields results consistent with the observational data.

Variables and Responses for UQ

UQ methods that perform a forward uncertainty propagation map probability or interval information for input parameters into probability or interval information for output response functions. The \(m\) functions in the Dakota response data set are interpreted as \(m\) general response functions by the Dakota methods (with no specific interpretation of the functions as for optimization and least squares).

Within the variables specification, uncertain variable descriptions are employed to define the random variable distributions (refer to Uncertain Variables). For Bayesian inference methods, these uncertain variable properties characterize the prior distribution to be updated and constrained by the observational data. As enumerated in Uncertain Variables, uncertain variables types are categorized as either aleatory or epistemic and as either continuous or discrete, where discrete types include integer ranges, integer sets, string sets, and real sets. The continuous aleatory distribution types include: normal (Gaussian), lognormal, uniform, loguniform, triangular, exponential, beta, gamma, gumbel, frechet, weibull, and histogram bin. The discrete aleatory distribution types include: poisson, binomial, negative binomial, geometric, hypergeometric, and discrete histograms for integers, strings, and reals. The epistemic distribution types include continuous intervals, discrete integer ranges, and discrete sets for integers, strings, and reals. While many of the epistemic types appear similar to aleatory counterparts, a key difference is that the latter requires probabilities for each value within a range or set, whereas the former will use, at most, a subjective belief specification.

When gradient and/or Hessian information is used in an uncertainty assessment, derivative components are normally computed with respect to the active continuous variables, which could be aleatory uncertain, epistemic uncertain, aleatory and epistemic uncertain, or all continuous variables, depending on the active view (see Management of Mixed Variables by Method).

Sampling Methods

Sampling techniques are selected using the sampling method

selection. This method generates sets of samples according to the

probability distributions of the uncertain variables and maps them into

corresponding sets of response functions, where the number of samples is

specified by the samples integer specification. Means, standard

deviations, coefficients of variation (COVs), and 95% confidence

intervals are computed for the response functions. Probabilities and

reliabilities may be computed for response_levels specifications,

and response levels may be computed for either probability_levels or

reliability_levels specifications.

Currently, traditional Monte Carlo (MC), Latin hypercube sampling (LHS), and

low-discrepancy sampling (LD)/quasi-Monte Carlo sampling (QMC) are supported by

Dakota and are chosen by specifying sample_type as

random,

lhs, or

low_discrepancy. In Monte Carlo sampling,

the samples are selected randomly according to the user-specified probability

distributions. Latin hypercube sampling is a stratified sampling technique for

which the range of each uncertain variable is divided into \(N_{s}\)

segments of equal probability, where \(N_{s}\) is the number of samples

requested. The relative lengths of the segments are determined by the nature of

the specified probability distribution (e.g., uniform has segments of equal

width, normal has small segments near the mean and larger segments in the

tails). For each of the uncertain variables, a sample is selected randomly from

each of these equal probability segments. These \(N_{s}\) values for each of

the individual parameters are then combined in a shuffling operation to create a

set of \(N_{s}\) parameter vectors with a specified correlation structure. A

feature of the resulting sample set is that every row and column in the

hypercube of partitions has exactly one sample. Since the total number of

samples is exactly equal to the number of partitions used for each uncertain

variable, an arbitrary number of desired samples is easily accommodated (as

compared to less flexible approaches in which the total number of samples is a

product or exponential function of the number of intervals for each variable,

i.e., many classical design of experiments methods).

Low-discrepancy or quasi-Monte Carlo sampling comes in two major flavors: lattice rules and digital nets. The well-known Sobol sequence [Sobol67] is an example of a digital net. Just as Latin hypercube samples, the points are carefully chosen such that they cover the parameter space more uniformly, in the sense that the samples exhibit low discrepancy. This discrepancy is important, because it directly appears in the bound for the integration error. In particular, if one uses \(N\) points \(\boldsymbol{t}_0, \boldsymbol{t}_1, \ldots, \boldsymbol{t}_{N-1}\) to approximate an integral \(I(f)\) as \(I_{N}(f)\), the Koksma-Hlawka inequality says that

where \(D\) is the discrepancy of the point set, and where \(V(f)\) is

the variation of the function \(f\). For a given function, it is thus

advantageous to use points for which the discrepancy is as small as possible.

Currently, Dakota supports

rank_1_lattice rules and

digital_nets.

Low-discrepancy points, and, in particular digital nets, can, under certain

assumptions, outperform LHS, in the sense that they yield faster convergence

when the points are used to approximate the mean of a model response. A good

introduction to quasi-Monte Carlo can be found in [DKS13].

Advantages of sampling-based methods include their relatively simple implementation and their independence from the scientific disciplines involved in the analysis. The main drawback of these techniques is the large number of function evaluations needed to generate converged statistics, which can render such an analysis computationally very expensive, if not intractable, for real-world engineering applications. LHS techniques, in general, require fewer samples than traditional Monte Carlo for the same accuracy in statistics, but they still can be prohibitively expensive. For further information on the method and its relationship to other sampling techniques, one is referred to the works by McKay, et al. [MBC79], Iman and Shortencarier [IS84], and Helton and Davis [HD00]. Note that under certain separability conditions associated with the function to be sampled, Latin hypercube sampling provides a more accurate estimate of the mean value than does random sampling. That is, given an equal number of samples, the LHS estimate of the mean will have less variance than the mean value obtained through random sampling. Low-discrepancy samples, and, in particular digital nets, can provide even more accurate estimates of the mean value, provided the function one tries to integrate is sufficiently smooth. Some digital net point sets may even exhibit higher-order convergence of the mean estimate.

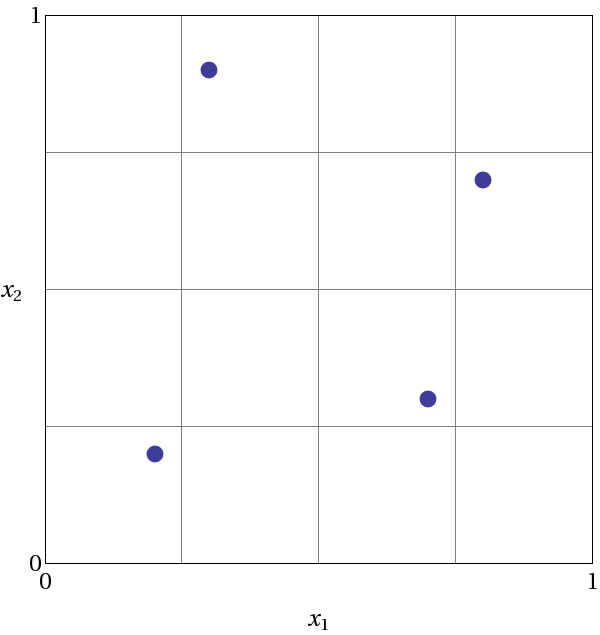

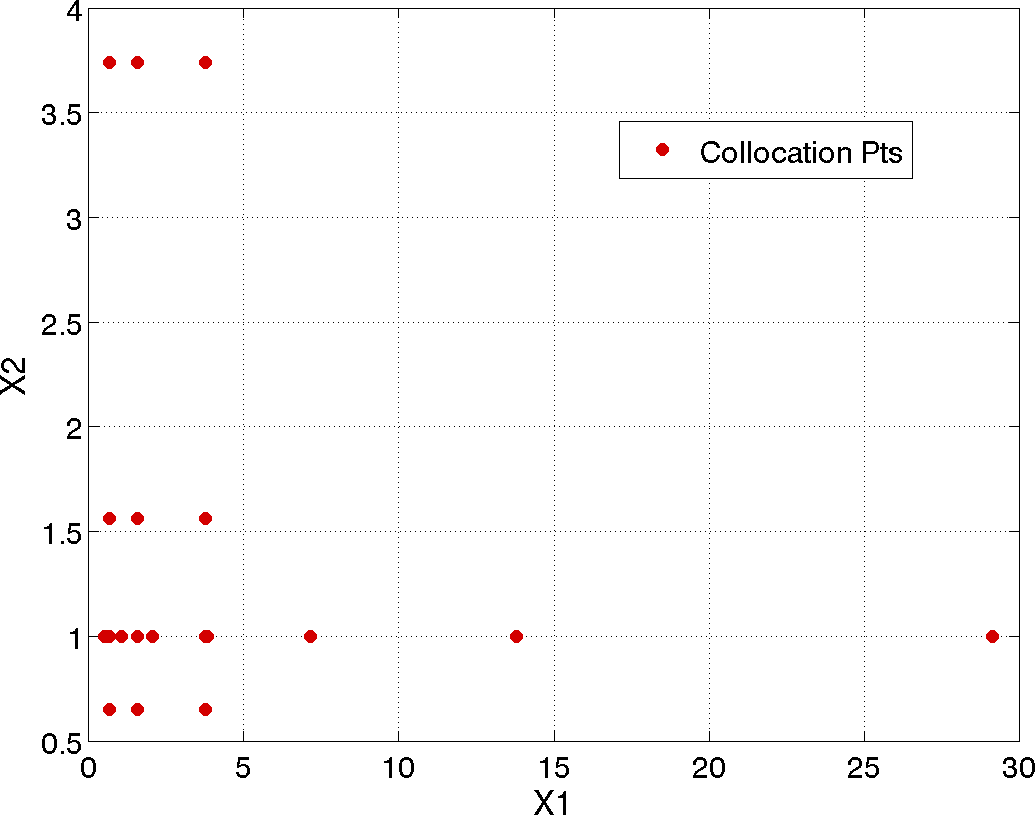

Fig. 39 demonstrates Latin hypercube sampling on a two-variable parameter space. Here, the range of both parameters, \(x_1\) and \(x_2\), is \([0,1]\). Also, for this example both \(x_1\) and \(x_2\) have uniform statistical distributions. For Latin hypercube sampling, the range of each parameter is divided into \(p\) “bins” of equal probability. For parameters with uniform distributions, this corresponds to partitions of equal size. For \(n\) design parameters, this partitioning yields a total of \(p^{n}\) bins in the parameter space. Next, \(p\) samples are randomly selected in the parameter space, with the following restrictions: (a) each sample is randomly placed inside a bin, and (b) for all one-dimensional projections of the \(p\) samples and bins, there will be one and only one sample in each bin. In a two-dimensional example such as that shown in Fig. 39, these LHS rules guarantee that only one bin can be selected in each row and column. For \(p=4\), there are four partitions in both \(x_1\) and \(x_2\). This gives a total of 16 bins, of which four will be chosen according to the criteria described above. Note that there is more than one possible arrangement of bins that meet the LHS criteria. The dots in Fig. 39 represent the four sample sites in this example, where each sample is randomly located in its bin. There is no restriction on the number of bins in the range of each parameter, however, all parameters must have the same number of bins.

Fig. 39 An example of Latin hypercube sampling with four bins in design parameters \(x_1\) and \(x_2\). The dots are the sample sites.

The actual algorithm for generating Latin hypercube samples is more complex than indicated by the description given above. For example, the Latin hypercube sampling method implemented in the LHS code [SW04] takes into account a user-specified correlation structure when selecting the sample sites. For more details on the implementation of the LHS algorithm, see Reference [SW04].

In addition to Monte Carlo, LHS and quasi-Monte Carlo design choices, Dakota sampling methods support options for incrementally-refined designs, generation of approximately determinant-optimal (D-optimal) designs, and selection of sample sizes to satisfy Wilks’ criteria.

Uncertainty Quantification Example using Sampling Methods

The input file in Listing 29 demonstrates the use of Latin hypercube Monte Carlo sampling for assessing probability of failure as measured by specified response levels. The two-variable Textbook example problem (see Textbook) will be used to demonstrate the application of sampling methods for uncertainty quantification where it is assumed that \(x_1\) and \(x_2\) are uniform uncertain variables on the interval \([0,1]\).

The number of samples to perform is controlled with the samples

specification, the type of sampling algorithm to use is controlled with

the sample_type specification, the levels used for computing

statistics on the response functions is specified with the

response_levels input, and the seed specification controls the

sequence of the pseudo-random numbers generated by the sampling

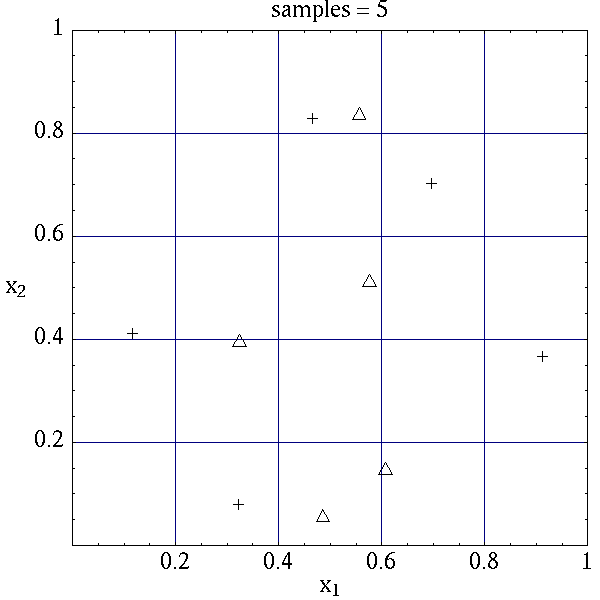

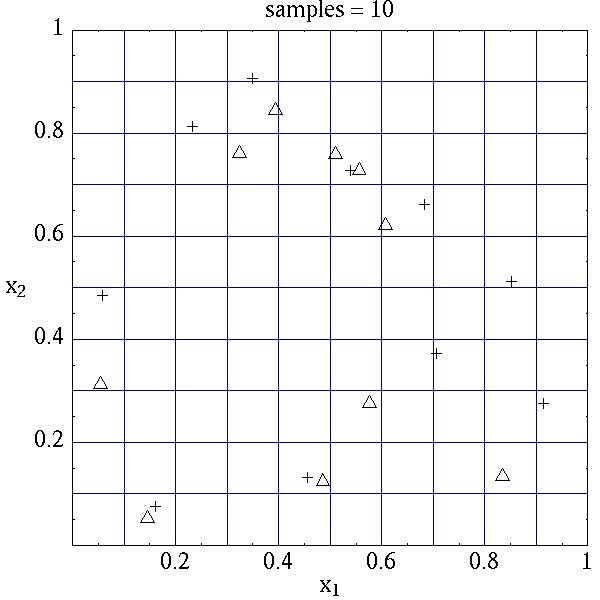

algorithms. The input samples generated are shown in

Table 7 for the case where samples =

5 and samples = 10 for both random (\(\triangle\)) and lhs

(\(+\)) sample types.

dakota/share/dakota/examples/users/textbook_uq_sampling.in# Dakota Input File: textbook_uq_sampling.in

environment

tabular_data

tabular_data_file = 'textbook_uq_sampling.dat'

top_method_pointer = 'UQ'

method

id_method = 'UQ'

sampling

sample_type lhs

samples = 10

seed = 98765

response_levels = 0.1 0.2 0.6

0.1 0.2 0.6

0.1 0.2 0.6

distribution cumulative

variables

uniform_uncertain = 2

lower_bounds = 0. 0.

upper_bounds = 1. 1.

descriptors = 'x1' 'x2'

interface

id_interface = 'I1'

analysis_drivers = 'text_book'

fork

asynchronous evaluation_concurrency = 5

responses

response_functions = 3

no_gradients

no_hessians

|

|

Latin hypercube sampling ensures full coverage of the range of the input

variables, which is often a problem with Monte Carlo sampling when the

number of samples is small. In the case of samples = 5, poor

stratification is evident in \(x_1\) as four out of the five Monte

Carlo samples are clustered in the range \(0.35 < x_1 < 0.55\), and

the regions \(x_1 < 0.3\) and \(0.6 < x_1 < 0.9\) are completely

missed. For the case where samples = 10, some clustering in the

Monte Carlo samples is again evident with 4 samples in the range

\(0.5 < x_1 < 0.55\). In both cases, the stratification with LHS is

superior.

The response function statistics returned by Dakota are shown in

Listing 30. The first block of output

specifies the response sample means, sample standard deviations, and

skewness and kurtosis. The second block of output displays confidence

intervals on the means and standard deviations of the responses. The

third block defines Probability Density Function (PDF) histograms of the

samples: the histogram bins are defined by the lower and upper values of

the bin and the corresponding density for that bin. Note that these bin

endpoints correspond to the response_levels and/or

probability_levels defined by the user in the Dakota input file. If

there are just a few levels, these histograms may be coarse. Dakota does

not do anything to optimize the bin size or spacing. Finally, the last

section of the output defines the Cumulative Distribution Function (CDF)

pairs. In this case, distribution cumulative was specified for the

response functions, and Dakota presents the probability levels

corresponding to the specified response levels (response_levels)

that were set. The default compute probabilities was used.

Alternatively, Dakota could have provided CCDF pairings, reliability

levels corresponding to prescribed response levels, or response levels

corresponding to prescribed probability or reliability levels.

Statistics based on 10 samples:

Sample moment statistics for each response function:

Mean Std Dev Skewness Kurtosis

response_fn_1 3.8383990322e-01 4.0281539886e-01 1.2404952971e+00 6.5529797327e-01

response_fn_2 7.4798705803e-02 3.4686110941e-01 4.5716015887e-01 -5.8418924529e-01

response_fn_3 7.0946176558e-02 3.4153246532e-01 5.2851897926e-01 -8.2527332042e-01

95% confidence intervals for each response function:

LowerCI_Mean UpperCI_Mean LowerCI_StdDev UpperCI_StdDev

response_fn_1 9.5683125821e-02 6.7199668063e-01 2.7707061315e-01 7.3538389383e-01

response_fn_2 -1.7333078422e-01 3.2292819583e-01 2.3858328290e-01 6.3323317325e-01

response_fn_3 -1.7337143113e-01 3.1526378424e-01 2.3491805390e-01 6.2350514636e-01

Probability Density Function (PDF) histograms for each response function:

PDF for response_fn_1:

Bin Lower Bin Upper Density Value

--------- --------- -------------

2.3066424677e-02 1.0000000000e-01 3.8994678038e+00

1.0000000000e-01 2.0000000000e-01 2.0000000000e+00

2.0000000000e-01 6.0000000000e-01 5.0000000000e-01

6.0000000000e-01 1.2250968624e+00 4.7992562123e-01

PDF for response_fn_2:

Bin Lower Bin Upper Density Value

--------- --------- -------------

-3.5261164651e-01 1.0000000000e-01 1.1046998102e+00

1.0000000000e-01 2.0000000000e-01 2.0000000000e+00

2.0000000000e-01 6.0000000000e-01 5.0000000000e-01

6.0000000000e-01 6.9844576220e-01 1.0157877573e+00

PDF for response_fn_3:

Bin Lower Bin Upper Density Value

--------- --------- -------------

-3.8118095128e-01 1.0000000000e-01 1.2469321539e+00

1.0000000000e-01 2.0000000000e-01 0.0000000000e+00

2.0000000000e-01 6.0000000000e-01 7.5000000000e-01

6.0000000000e-01 6.4526450977e-01 2.2092363423e+00

Level mappings for each response function:

Cumulative Distribution Function (CDF) for response_fn_1:

Response Level Probability Level Reliability Index General Rel Index

-------------- ----------------- ----------------- -----------------

1.0000000000e-01 3.0000000000e-01

2.0000000000e-01 5.0000000000e-01

6.0000000000e-01 7.0000000000e-01

Cumulative Distribution Function (CDF) for response_fn_2:

Response Level Probability Level Reliability Index General Rel Index

-------------- ----------------- ----------------- -----------------

1.0000000000e-01 5.0000000000e-01

2.0000000000e-01 7.0000000000e-01

6.0000000000e-01 9.0000000000e-01

Cumulative Distribution Function (CDF) for response_fn_3:

Response Level Probability Level Reliability Index General Rel Index

-------------- ----------------- ----------------- -----------------

1.0000000000e-01 6.0000000000e-01

2.0000000000e-01 6.0000000000e-01

6.0000000000e-01 9.0000000000e-01

In addition to obtaining statistical summary information of the type shown in Listing 30, the results of LHS sampling also include correlations.

Four types of correlations are returned in the output: simple and partial “raw” correlations, and simple and partial “rank” correlations. The raw correlations refer to correlations performed on the actual input and output data. Rank correlations refer to correlations performed on the ranks of the data. Ranks are obtained by replacing the actual data by the ranked values, which are obtained by ordering the data in ascending order. For example, the smallest value in a set of input samples would be given a rank 1, the next smallest value a rank 2, etc. Rank correlations are useful when some of the inputs and outputs differ greatly in magnitude: then it is easier to compare if the smallest ranked input sample is correlated with the smallest ranked output, for example.

Correlations are always calculated between two sets of sample data. One can calculate correlation coefficients between two input variables, between an input and an output variable (probably the most useful), or between two output variables. The simple correlation coefficients presented in the output tables are Pearson’s correlation coefficient, which is defined for two variables \(x\) and \(y\) as: \(\mathtt{Corr}(x,y) = \frac{\sum_{i}(x_{i}-\bar{x})(y_{i}-\bar{y})} {\sqrt{\sum_{i}(x_{i}-\bar{x})^2\sum_{i}(y_{i}-\bar{y})^2}}\). Partial correlation coefficients are similar to simple correlations, but a partial correlation coefficient between two variables measures their correlation while adjusting for the effects of the other variables. For example, say one has a problem with two inputs and one output; and the two inputs are highly correlated. Then the correlation of the second input and the output may be very low after accounting for the effect of the first input. The rank correlations in Dakota are obtained using Spearman’s rank correlation. Spearman’s rank is the same as the Pearson correlation coefficient except that it is calculated on the rank data.

Listing 31 shows an example of the

correlation output provided by Dakota for the input file in

Listing 29. Note that these correlations

are presently only available when one specifies lhs as the sampling

method under sampling. Also note that the simple and partial

correlations should be similar in most cases (in terms of values of

correlation coefficients). This is because we use a default “restricted

pairing” method in the LHS routine which forces near-zero correlation

amongst uncorrelated inputs.

Simple Correlation Matrix between input and output:

x1 x2 response_fn_1 response_fn_2 response_fn_3

x1 1.00000e+00

x2 -7.22482e-02 1.00000e+00

response_fn_1 -7.04965e-01 -6.27351e-01 1.00000e+00

response_fn_2 8.61628e-01 -5.31298e-01 -2.60486e-01 1.00000e+00

response_fn_3 -5.83075e-01 8.33989e-01 -1.23374e-01 -8.92771e-01 1.00000e+00

Partial Correlation Matrix between input and output:

response_fn_1 response_fn_2 response_fn_3

x1 -9.65994e-01 9.74285e-01 -9.49997e-01

x2 -9.58854e-01 -9.26578e-01 9.77252e-01

Simple Rank Correlation Matrix between input and output:

x1 x2 response_fn_1 response_fn_2 response_fn_3

x1 1.00000e+00

x2 -6.66667e-02 1.00000e+00

response_fn_1 -6.60606e-01 -5.27273e-01 1.00000e+00

response_fn_2 8.18182e-01 -6.00000e-01 -2.36364e-01 1.00000e+00

response_fn_3 -6.24242e-01 7.93939e-01 -5.45455e-02 -9.27273e-01 1.00000e+00

Partial Rank Correlation Matrix between input and output:

response_fn_1 response_fn_2 response_fn_3

x1 -8.20657e-01 9.74896e-01 -9.41760e-01

x2 -7.62704e-01 -9.50799e-01 9.65145e-01

Finally, note that the LHS package can be used for design of experiments

over design and state variables by including an active view override in

the variables specification section of the Dakota input file (see

Active Variables View). Then, instead

of iterating on only the uncertain variables, the LHS package will

sample over all of the active variables. In the active all view,

continuous design and continuous state variables are treated as having

uniform probability distributions within their upper and lower bounds,

discrete design and state variables are sampled uniformly from within

their sets or ranges, and any uncertain variables are sampled within

their specified probability distributions.

Incremental Sampling

In many situations, one may run an initial sample set and then need to

perform further sampling to get better estimates of the mean, variance,

and percentiles, and to obtain more comprehensive sample coverage. We

call this capability incremental sampling. Typically, a Dakota restart

file (dakota.rst) would be available from the original sample,

so only the newly

generated samples would need to be evaluated. Incremental sampling

supports continuous uncertain variables and discrete uncertain variables

such as discrete distributions (e.g. binomial, Poisson, etc.) as well as

histogram variables and uncertain set types.

There are two cases, incremental random and incremental Latin hypercube sampling, with incremental LHS being the most common. One major advantage of LHS incremental sampling is that it maintains the stratification and correlation structure of the original LHS sample. That is, if one generated two independent LHS samples and simply merged them, the calculation of the accuracy of statistical measures such as the mean and the variance would be slightly incorrect. However, in the incremental case, the full sample (double the original size) is a Latin Hypercube sample itself and statistical measures and their accuracy can be properly calculated. The incremental sampling capability is most useful when one is starting off with very small samples. Once the sample size is more than a few hundred, the benefit of incremental sampling diminishes.

Incremental random sampling: With incremental random sampling, the original sample set with \(N1\) samples must be generated using

sample_type = randomandsamples = N1. Then, the user can duplicate the Dakota input file and addrefinement_samples = N2with the number of new samples \(N2\) to be added. Random incremental sampling does not require a doubling of samples each time. Thus, the user can specify any number ofrefinement_samples(from an additional one sample to a large integer).For example, if the first sample has 50 samples, and 10 more samples are desired, the second Dakota run should specify

samples = 50,refinement_samples = 10. In this situation, only 10 new samples will be generated, and the final statistics will be reported at the end of the study both for the initial 50 samples and for the full sample of 60. The command line syntax for running the second sample isdakota -i input60.in -r dakota.50.rstwhereinput60.inis the input file with the refinement samples specification anddakota.50.rstis the restart file containing the initial 50 samples. Note that if the restart file has a different name, that is fine; the correct restart file name should be used.This process can be repeated if desired,arbitrarily extending the total sample size each time, e.g,

samples = 50,refinement_samples = 10 3 73 102.Incremental Latin hypercube sampling: With incremental LHS sampling, the original sample set with \(N1\) samples must be generated using

sample_type = lhsandsamples = N1. Then, the user can duplicate the Dakota input file and addrefinement_samples = N1. The sample size must double each time, so the first set of refinement samples must be the same size as the initial set. That is, if one starts with a very small sample size of 10, then one can use the incremental sampling capability to generate sample sizes of 20, 40, 80, etc.For example, if the first sample has 50 samples, in the second Dakota run, the number of refinement samples should be set to 50 for a total of 100. In this situation, only 50 new samples will be generated, and at the end of the study final statistics will be reported both for the initial 50 samples and for the full sample of 100. The command line syntax for running the second sample is

dakota -i input100.in -r dakota.50.rst, whereinput100.inis the input file with the incremental sampling specification anddakota.50.rstis the restart file containing the initial 50 samples. Note that if the restart file has a different name, that is fine; the correct restart file name should be used.This process can be repeated if desired, doubling the total sample size each time, e.g,

samples = 50,refinement_samples = 50 100 200 400.

Principal Component Analysis

As of Dakota 6.3, we added a capability to perform Principal Component Analysis on field response data when using LHS sampling. Principal components analysis (PCA) is a data reduction method and allows one to express an ensemble of field data with a set of principal components responsible for the spread of that data.

Dakota can calculate the principal components of the response matrix of

N samples * L responses (the field response of length L) using the

keyword principal_components. The Dakota implementation is under

active development: the PCA capability may ultimately be specified

elsewhere or used in different ways. For now, it is performed as a

post-processing analysis based on a set of Latin Hypercube samples.

If the user specifies LHS sampling with field data responses and also

specifies principal_components, Dakota will calculate the principal

components by calculating the eigenvalues and eigenvectors of a centered

data matrix. Further, if the user specifies

percent_variance_explained = 0.99, the number of components that

accounts for at least 99 percent of the variance in the responses will

be retained. The default for this percentage is 0.95. In many

applications, only a few principal components explain the majority of

the variance, resulting in significant data reduction. The principal

components are written to a file princ_comp.txt.

Dakota also uses the principal

components to create a surrogate model by representing the overall

response as weighted sum of M principal components, where the weights

will be determined by Gaussian processes which are a function of the

input uncertain variables. This reduced form then can be used for

sensitivity analysis, calibration, etc.

Wilks-based Sample Sizes

Most of the sampling methods require the user to specify the number of

samples in advance. However, if one specifies random sampling, one

can use an approach developed by Wilks:cite:p:Wilks to

determine the number of samples that ensures a particular confidence

level in a percentile of interest. The Wilks method of computing the

number of samples to execute for a random sampling study is based on

order statistics, eg considering the outputs ordered from smallest to

largest [NW04, Wil41]. Given a probability_level,

\(\alpha\), and confidence_level, \(\beta\), the Wilks

calculation determines the minimum number of samples required such that

there is \((\beta*100)\)% confidence that the

\((\alpha*100)\)%-ile of the uncertain distribution on model

output will fall below the actual \((\alpha*100)\)%-ile given by

the sample. To be more specific, if we wish to calculate the

\(95\%\) confidence limit on the \(95^{th}\) percentile, Wilks

indicates that 59 samples are needed. If we order the responses and take

the largest one, that value defines a tolerance limit on the 95th

percentile: we have a situation where \(95\%\) of the time, the

\(95^{th}\) percentile will fall at or below that sampled value.

This represents a one_sided_upper treatment applicable to the

largest output value. This treatment can be reversed to apply to the

lowest output value by using the one_sided_lower option, and further

expansion to include an interval containing both the smallest and the

largest output values in the statistical statement can be specified via

the two_sided option. Additional generalization to higher order

statistics, eg a statement applied to the N largest outputs

(one_sided_upper) or the N smallest and N largest outputs

(two_sided), can be specified using the order option along with

value N.

Double Sided Tolerance Interval Equivalent Normal Distribution

Tolerance Intervals (TIs) are a simple way to approximately account for the epistemic sampling uncertainty introduced from finite samples of a random variable. TIs are parameterized by two user-prescribed levels: one for the desired “coverage” proportion of a distribution and one for the desired degree of statistical “confidence” in covering or bounding at least that proportion. For instance, a \(95\%\) coverage / \(90\%\) confidence TI (\(95\%\)/\(90\%\) TI, \(95\)/\(90\) TI, or \(0.95\)/\(0.90\) TI) prescribes lower and upper values of a range said to have at least \(90\%\) odds that it covers or spans \(95\%\) of the “true” probability distribution from which the random samples were drawn, if they were drawn from a Normal distribution [JR20].

Reliability Methods

Reliability methods provide an alternative approach to uncertainty quantification which can be less computationally demanding than sampling techniques. Reliability methods for uncertainty quantification are based on probabilistic approaches that compute approximate response function distribution statistics based on specified uncertain variable distributions. These response statistics include response mean, response standard deviation, and cumulative or complementary cumulative distribution functions (CDF/CCDF). These methods are often more efficient at computing statistics in the tails of the response distributions (events with low probability) than sampling based approaches since the number of samples required to resolve a low probability can be prohibitive.

The methods all answer the fundamental question: “Given a set of uncertain input variables, \(\mathbf{X}\), and a scalar response function, \(g\), what is the probability that the response function is below or above a certain level, \(\bar{z}\)?” The former can be written as \(P[g(\mathbf{X}) \le \bar{z}] = \mathit{F}_{g}(\bar{z})\) where \(\mathit{F}_{g}(\bar{z})\) is the cumulative distribution function (CDF) of the uncertain response \(g(\mathbf{X})\) over a set of response levels. The latter can be written as \(P[g(\mathbf{X}) > \bar{z}]\) and defines the complementary cumulative distribution function (CCDF).

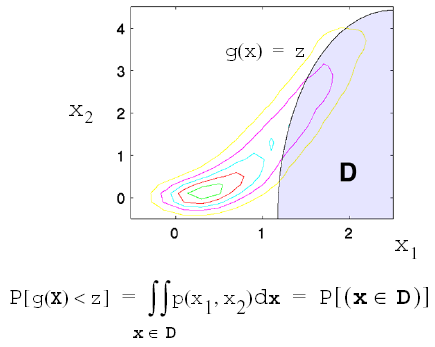

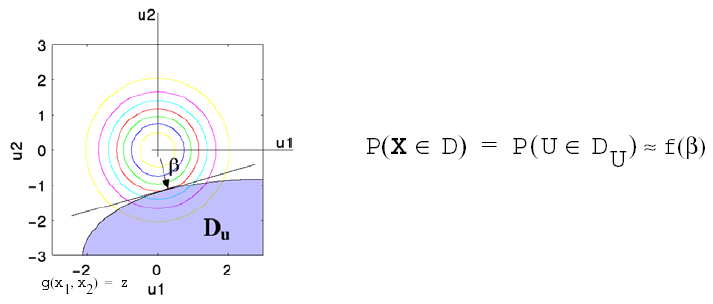

This probability calculation involves a multi-dimensional integral over an irregularly shaped domain of interest, \(\mathbf{D}\), where \(g(\mathbf{X}) < z\) as displayed in Fig. 40 for the case of two variables. The reliability methods all involve the transformation of the user-specified uncertain variables, \(\mathbf{X}\), with probability density function, \(p(x_1,x_2)\), which can be non-normal and correlated, to a space of independent Gaussian random variables, \(\mathbf{u}\), possessing a mean value of zero and unit variance (i.e., standard normal variables). The region of interest, \(\mathbf{D}\), is also mapped to the transformed space to yield, \(\mathbf{D_{u}}\) , where \(g(\mathbf{U}) < z\) as shown in Fig. 41. The Nataf transformation [DKL86], which is identical to the Rosenblatt transformation [Ros52] in the case of independent random variables, is used in Dakota to accomplish this mapping. This transformation is performed to make the probability calculation more tractable. In the transformed space, probability contours are circular in nature as shown in Fig. 41 unlike in the original uncertain variable space, Fig. 40. Also, the multi-dimensional integrals can be approximated by simple functions of a single parameter, \(\beta\), called the reliability index. \(\beta\) is the minimum Euclidean distance from the origin in the transformed space to the response surface. This point is also known as the most probable point (MPP) of failure. Note, however, the methodology is equally applicable for generic functions, not simply those corresponding to failure criteria; this nomenclature is due to the origin of these methods within the disciplines of structural safety and reliability. Note that there are local and global reliability methods. The majority of the methods available are local, meaning that a local optimization formulation is used to locate one MPP. In contrast, global methods can find multiple MPPs if they exist.

Fig. 40 Graphical depiction of calculation of cumulative distribution function in the original uncertain variable space.

Fig. 41 Graphical depiction of integration for the calculation of cumulative distribution function in the transformed uncertain variable space.

Local Reliability Methods

The main section on Local Reliability Methods provides the algorithmic details for the local reliability methods, including the Mean Value method and the family of most probable point (MPP) search methods.

Method mapping

Given settings for limit state approximation, approximation order, integration approach, and other details presented to this point, it is evident that the number of algorithmic combinations is high. Table 8 provides a succinct mapping for some of these combinations to common method names from the reliability literature, where bold font indicates the most well-known combinations and regular font indicates other supported combinations.

Order of approximation and integration |

||

|---|---|---|

MPP search |

First order |

Second order |

none |

MVFOSM |

MVSOSM |

x_taylor_mean |

AMV |

AMV\(^2\) |

u_taylor_mean |

u-space AMV |

u-space AMV\(^2\) |

x_taylor_mpp |

AMV+ |

AMV\(^2\)+ |

u_taylor_mpp |

u-space AMV+ |

u-space AMV\(^2\)+ |

x_two_point |

TANA |

|

u_two_point |

u-space TANA |

|

no_approx |

FORM |

SORM |

Within the Dakota specification (refer to local_reliability),

the MPP search and integration order

selections are explicit in the method specification, but the order of

the approximation is inferred from the associated response specification

(as is done with local taylor series approximations described in

Taylor Series). Thus,

reliability methods do not have to be synchronized in approximation and

integration order as shown in the table; however, it is often desirable

to do so.

Global Reliability Methods

Global reliability methods are designed to handle nonsmooth and multimodal failure surfaces, by creating global approximations based on Gaussian process models. They accurately resolve a particular contour of a response function and then estimate probabilities using multimodal adaptive importance sampling.

The global reliability method in Dakota is called Efficient Global Reliability Analysis (EGRA) [BES+08]. The name is due to its roots in efficient global optimization (EGO) [HANZ06, JSW98]. The main idea in EGO-type optimization methods is that a global approximation is made of the underlying function. This approximation, which is a Gaussian process model, is used to guide the search by finding points which maximize the expected improvement function (EIF). The EIF is used to select the location at which a new training point should be added to the Gaussian process model by maximizing the amount of improvement in the objective function that can be expected by adding that point. A point could be expected to produce an improvement in the objective function if its predicted value is better than the current best solution, or if the uncertainty in its prediction is such that the probability of it producing a better solution is high. Because the uncertainty is higher in regions of the design space with fewer observations, this provides a balance between exploiting areas of the design space that predict good solutions, and exploring areas where more information is needed.

The general procedure of these EGO-type methods is:

Build an initial Gaussian process model of the objective function.

Find the point that maximizes the EIF. If the EIF value at this point is sufficiently small, stop.

Evaluate the objective function at the point where the EIF is maximized. Update the Gaussian process model using this new point. Go to Step 2.

Gaussian process (GP) models are used because they provide not just a predicted value at an unsampled point, but also an estimate of the prediction variance. This variance gives an indication of the uncertainty in the GP model, which results from the construction of the covariance function. This function is based on the idea that when input points are near one another, the correlation between their corresponding outputs will be high. As a result, the uncertainty associated with the model’s predictions will be small for input points which are near the points used to train the model, and will increase as one moves further from the training points.

The expected improvement function is used in EGO algorithms to select the location at which a new training point should be added. The EIF is defined as the expectation that any point in the search space will provide a better solution than the current best solution based on the expected values and variances predicted by the GP model. It is important to understand how the use of this EIF leads to optimal solutions. The EIF indicates how much the objective function value at a new potential location is expected to be less than the predicted value at the current best solution. Because the GP model provides a Gaussian distribution at each predicted point, expectations can be calculated. Points with good expected values and even a small variance will have a significant expectation of producing a better solution (exploitation), but so will points that have relatively poor expected values and greater variance (exploration).

The application of EGO to reliability analysis, however, is made more complicated due to the inclusion of equality constraints. In forward reliability analysis, the response function appears as a constraint rather than the objective. That is, we want to satisfy the constraint that the response equals a threshold value and is on the limit state: \(G({\bf u})\!=\!\bar{z}\). Therefore, the EIF function was modified to focus on feasibility, and instead of using an expected improvement function, we use an expected feasibility function (EFF) [BES+08]. The EFF provides an indication of how well the response is expected to satisfy the equality constraint. Points where the expected value is close to the threshold (\(\mu_G\!\approx\!\bar{z}\)) and points with a large uncertainty in the prediction will have large expected feasibility values.

The general outline of the EGRA algorithm is as follows: LHS sampling is used to generate a small number of samples from the true response function. Then, an initial Gaussian process model is constructed. Based on the EFF, the point with maximum EFF is found using the global optimizer DIRECT. The true response function is then evaluated at this new point, and this point is added to the sample set and the process of building a new GP model and maximizing the EFF is repeated until the maximum EFF is small. At this stage, the GP model is accurate in the vicinity of the limit state. The GP model is then used to calculate the probability of failure using multimodal importance sampling, which is explained below.

One method to calculate the probability of failure is to directly perform the probability integration numerically by sampling the response function. Sampling methods can be prohibitively expensive because they generally require a large number of response function evaluations. Importance sampling methods reduce this expense by focusing the samples in the important regions of the uncertain space. They do this by centering the sampling density function at the MPP rather than at the mean. This ensures the samples will lie the region of interest, thus increasing the efficiency of the sampling method. Adaptive importance sampling (AIS) further improves the efficiency by adaptively updating the sampling density function. Multimodal adaptive importance sampling [DM98] is a variation of AIS that allows for the use of multiple sampling densities making it better suited for cases where multiple sections of the limit state are highly probable.

Note that importance sampling methods require that the location of at least one MPP be known because it is used to center the initial sampling density. However, current gradient-based, local search methods used in MPP search may fail to converge or may converge to poor solutions for highly nonlinear problems, possibly making these methods inapplicable. The EGRA algorithm described above does not depend on the availability of accurate gradient information, making convergence more reliable for nonsmooth response functions. Moreover, EGRA has the ability to locate multiple failure points, which can provide multiple starting points and thus a good multimodal sampling density for the initial steps of multimodal AIS. The probability assessment using multimodal AIS thus incorporates probability of failure at multiple points.

Uncertainty Quantification Examples using Reliability Analysis

In summary, the user can choose to perform either forward (RIA) or inverse (PMA) mappings when performing a reliability analysis. With either approach, there are a variety of methods from which to choose in terms of limit state approximations (MVFOSM, MVSOSM, x-/u-space AMV, x-/u-space AMV\(^2\), x-/u-space AMV+, x-/u-space AMV\(^2\)+, x-/u-space TANA, and FORM/SORM), probability integrations (first-order or second-order), limit state Hessian selection (analytic, finite difference, BFGS, or SR1), and MPP optimization algorithm (SQP or NIP) selections.

All reliability methods output approximate values of the CDF/CCDF response-probability-reliability levels for prescribed response levels (RIA) or prescribed probability or reliability levels (PMA). In addition, mean value methods output estimates of the response means and standard deviations as well as importance factors that attribute variance among the set of uncertain variables (provided a nonzero response variance estimate).

Mean-value Reliability with Textbook

Listing 32 shows the

Dakota input file for an example problem that demonstrates the simplest

reliability method, called the mean value method (also referred to as

the Mean Value First Order Second Moment method). It is specified with

method keyword local_reliability. This method calculates the mean

and variance of the response function based on information about the

mean and variance of the inputs and gradient information at the mean of

the inputs. The mean value method is extremely cheap computationally

(only five runs were required for the textbook function), but can be

quite inaccurate, especially for nonlinear problems and/or problems with

uncertain inputs that are significantly non-normal. More detail on the

mean value method can be found in the main Local Reliability Methods section,

and more detail on reliability methods in general (including the more advanced methods)

is found in Reliability Methods.

Example output from the mean value method is displayed in Listing 33. Note that since the mean of both inputs is 1, the mean value of the output for response 1 is zero. However, the mean values of the constraints are both 0.5. The mean value results indicate that variable x1 is more important in constraint 1 while x2 is more important in constraint 2, which is the case based on Textbook. The importance factors are not available for the first response as the standard deviation is zero.

dakota/share/dakota/examples/users/textbook_uq_meanvalue.in# Dakota Input File: textbook_uq_meanvalue.in

environment

method

local_reliability

interface

analysis_drivers = 'text_book'

fork asynchronous

variables

lognormal_uncertain = 2

means = 1. 1.

std_deviations = 0.5 0.5

descriptors = 'TF1ln' 'TF2ln'

responses

response_functions = 3

numerical_gradients

method_source dakota

interval_type central

fd_gradient_step_size = 1.e-4

no_hessians

MV Statistics for response_fn_1:

Approximate Mean Response = 0.0000000000e+00

Approximate Standard Deviation of Response = 0.0000000000e+00

Importance Factors not available.

MV Statistics for response_fn_2:

Approximate Mean Response = 5.0000000000e-01

Approximate Standard Deviation of Response = 1.0307764064e+00

Importance Factor for TF1ln = 9.4117647059e-01

Importance Factor for TF2ln = 5.8823529412e-02

MV Statistics for response_fn_3:

Approximate Mean Response = 5.0000000000e-01

Approximate Standard Deviation of Response = 1.0307764064e+00

Importance Factor for TF1ln = 5.8823529412e-02

Importance Factor for TF2ln = 9.4117647059e-01

FORM Reliability with Lognormal Ratio

This example quantifies the uncertainty in the “log ratio” response function:

by computing approximate response statistics using reliability analysis to determine the response cumulative distribution function:

where \(X_1\) and \(X_2\) are identically distributed lognormal

random variables with means of 1, standard deviations of 0.5,

and correlation coefficient of 0.3.

A Dakota input file showing RIA using FORM (option 7 in limit state

approximations combined with first-order integration) is listed in

Listing 34. The user first

specifies the local_reliability method, followed by the MPP search

approach and integration order. In this example, we specify

mpp_search no_approx and utilize the default first-order integration

to select FORM. Finally, the user specifies response levels or

probability/reliability levels to determine if the problem will be

solved using an RIA approach or a PMA approach. In the example of

Listing 34, we use RIA by

specifying a range of response_levels for the problem. The resulting

output for this input is shown in

Listing 35, with probability

and reliability levels listed for each response level.

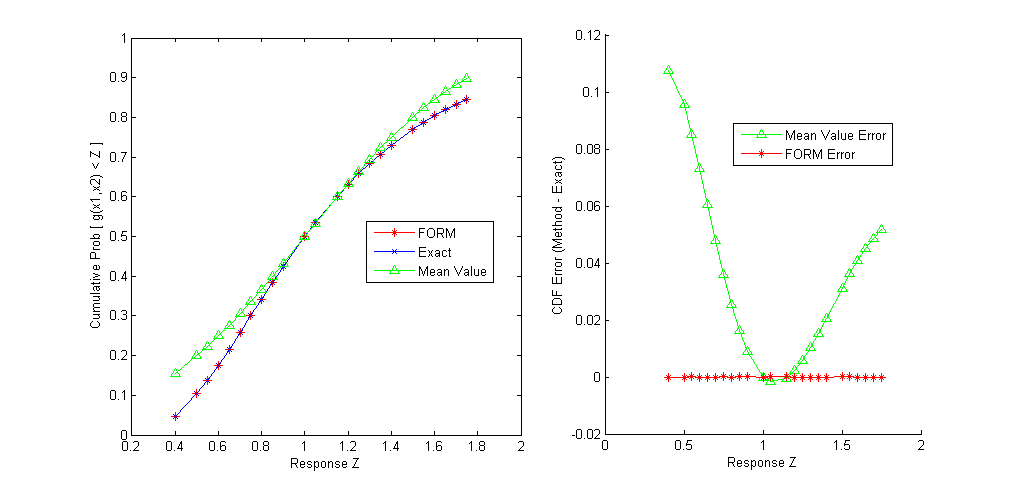

Fig. 42 shows that FORM compares favorably

to an exact analytic solution for this problem. Also note that FORM does

have some error in the calculation of CDF values for this problem, but

it is a very small error (on the order of e-11), much smaller than the

error obtained when using a Mean Value method, which will be discussed

next.

dakota/share/dakota/examples/users/logratio_uq_reliability.in# Dakota Input File: logratio_uq_reliability.in

environment

method

local_reliability

mpp_search no_approx

response_levels = .4 .5 .55 .6 .65 .7

.75 .8 .85 .9 1. 1.05 1.15 1.2 1.25 1.3

1.35 1.4 1.5 1.55 1.6 1.65 1.7 1.75

variables

lognormal_uncertain = 2

means = 1. 1

std_deviations = 0.5 0.5

initial_point = 0.6 1.4

descriptors = 'TF1ln' 'TF2ln'

uncertain_correlation_matrix = 1 0.3

0.3 1

interface

analysis_drivers = 'log_ratio'

direct

# fork asynch

responses

response_functions = 1

numerical_gradients

method_source dakota

interval_type central

fd_step_size = 1.e-4

no_hessians

Cumulative Distribution Function (CDF) for response_fn_1:

Response Level Probability Level Reliability Index

-------------- ----------------- -----------------

4.0000000000e-01 4.7624085962e-02 1.6683404020e+00

5.0000000000e-01 1.0346525475e-01 1.2620507942e+00

5.5000000000e-01 1.3818404972e-01 1.0885143628e+00

6.0000000000e-01 1.7616275822e-01 9.3008801339e-01

6.5000000000e-01 2.1641741368e-01 7.8434989943e-01

7.0000000000e-01 2.5803428381e-01 6.4941748143e-01

7.5000000000e-01 3.0020938124e-01 5.2379840558e-01

8.0000000000e-01 3.4226491013e-01 4.0628960782e-01

8.5000000000e-01 3.8365052982e-01 2.9590705956e-01

9.0000000000e-01 4.2393548232e-01 1.9183562480e-01

1.0000000000e+00 5.0000000000e-01 6.8682233460e-12

1.0500000000e+00 5.3539344228e-01 -8.8834907167e-02

1.1500000000e+00 6.0043460094e-01 -2.5447217462e-01

1.2000000000e+00 6.3004131827e-01 -3.3196278078e-01

1.2500000000e+00 6.5773508987e-01 -4.0628960782e-01

1.3000000000e+00 6.8356844630e-01 -4.7770089473e-01

1.3500000000e+00 7.0761025532e-01 -5.4641676380e-01

1.4000000000e+00 7.2994058691e-01 -6.1263331274e-01

1.5000000000e+00 7.6981945355e-01 -7.3825238860e-01

1.5500000000e+00 7.8755158269e-01 -7.9795460350e-01

1.6000000000e+00 8.0393505584e-01 -8.5576118635e-01

1.6500000000e+00 8.1906005158e-01 -9.1178881995e-01

1.7000000000e+00 8.3301386860e-01 -9.6614373461e-01

1.7500000000e+00 8.4588021938e-01 -1.0189229206e+00

Fig. 42 Comparison of the cumulative distribution function (CDF) computed by FORM, the Mean Value method, and the exact CDF for \(g(x_1,x_2)=\frac{x_1}{x_2}\)

If the user specifies local_reliability as a method with no

additional specification on how to do the MPP search (for example, by

commenting out mpp_search no_approx in

Listing 34), then no MPP search

is done: the Mean Value method is used. The mean value results are shown

in Listing 36 and consist of

approximate mean and standard deviation of the response, the importance

factors for each uncertain variable, and approximate

probability/reliability levels for the prescribed response levels that

have been inferred from the approximate mean and standard deviation (see

the Mean Value section of the main Reliability Methods page). It is evident that the statistics

are considerably different from the fully converged FORM results;

however, these rough approximations are also much less expensive to

calculate. The importance factors are a measure of the sensitivity of

the response function(s) to the uncertain input variables. A comparison

of the mean value results with the FORM results is shown in

Fig. 42. The mean value results are not

accurate near the tail values of the CDF, and can differ from the exact

solution by as much as 0.11 in CDF estimates. A comprehensive comparison

of various reliability methods applied to the logratio problem is

provided in [EB06].

MV Statistics for response_fn_1:

Approximate Mean Response = 1.0000000000e+00

Approximate Standard Deviation of Response = 5.9160798127e-01

Importance Factor for TF1ln = 7.1428570714e-01

Importance Factor for TF2ln = 7.1428572143e-01

Importance Factor for TF1ln TF2ln = -4.2857142857e-01

Cumulative Distribution Function (CDF) for response_fn_1:

Response Level Probability Level Reliability Index General Rel Index

-------------- ----------------- ----------------- -----------------

4.0000000000e-01 1.5524721837e-01 1.0141851006e+00 1.0141851006e+00

5.0000000000e-01 1.9901236093e-01 8.4515425050e-01 8.4515425050e-01

5.5000000000e-01 2.2343641149e-01 7.6063882545e-01 7.6063882545e-01

6.0000000000e-01 2.4948115037e-01 6.7612340040e-01 6.7612340040e-01

6.5000000000e-01 2.7705656603e-01 5.9160797535e-01 5.9160797535e-01

7.0000000000e-01 3.0604494093e-01 5.0709255030e-01 5.0709255030e-01

7.5000000000e-01 3.3630190949e-01 4.2257712525e-01 4.2257712525e-01

8.0000000000e-01 3.6765834596e-01 3.3806170020e-01 3.3806170020e-01

8.5000000000e-01 3.9992305332e-01 2.5354627515e-01 2.5354627515e-01

9.0000000000e-01 4.3288618783e-01 1.6903085010e-01 1.6903085010e-01

1.0000000000e+00 5.0000000000e-01 0.0000000000e+00 0.0000000000e+00

1.0500000000e+00 5.3367668035e-01 -8.4515425050e-02 -8.4515425050e-02

1.1500000000e+00 6.0007694668e-01 -2.5354627515e-01 -2.5354627515e-01

1.2000000000e+00 6.3234165404e-01 -3.3806170020e-01 -3.3806170020e-01

1.2500000000e+00 6.6369809051e-01 -4.2257712525e-01 -4.2257712525e-01

1.3000000000e+00 6.9395505907e-01 -5.0709255030e-01 -5.0709255030e-01

1.3500000000e+00 7.2294343397e-01 -5.9160797535e-01 -5.9160797535e-01

1.4000000000e+00 7.5051884963e-01 -6.7612340040e-01 -6.7612340040e-01

1.5000000000e+00 8.0098763907e-01 -8.4515425050e-01 -8.4515425050e-01

1.5500000000e+00 8.2372893005e-01 -9.2966967555e-01 -9.2966967555e-01

1.6000000000e+00 8.4475278163e-01 -1.0141851006e+00 -1.0141851006e+00

1.6500000000e+00 8.6405064339e-01 -1.0987005257e+00 -1.0987005257e+00

1.7000000000e+00 8.8163821351e-01 -1.1832159507e+00 -1.1832159507e+00

1.7500000000e+00 8.9755305196e-01 -1.2677313758e+00 -1.2677313758e+00

Additional reliability analysis and design results are provided in Sections Log Ratio through Steel Column.

Stochastic Expansion Methods

The development of these techniques mirrors that of deterministic finite element analysis through the utilization of the concepts of projection, orthogonality, and weak convergence. The polynomial chaos expansion is based on a multidimensional orthogonal polynomial approximation and the stochastic collocation approach is based on a multidimensional interpolation polynomial approximation, both formed in terms of standardized random variables. A distinguishing feature of these two methodologies is that the final solution is expressed as a functional mapping, and not merely as a set of statistics as is the case for many other methodologies (sampling, reliability, et al.). This makes these techniques particularly attractive for use in multi-physics applications which link different analysis packages. The first stochastic expansion method is the polynomial chaos expansion (PCE) [GRH99, GS91]. For smooth functions (i.e., analytic, infinitely-differentiable) in \(L^2\) (i.e., possessing finite variance), exponential convergence rates can be obtained under order refinement for integrated statistical quantities of interest such as mean, variance, and probability. Dakota implements the generalized PCE approach using the Wiener-Askey scheme [XK02], in which Hermite, Legendre, Laguerre, Jacobi, and generalized Laguerre orthogonal polynomials are used for modeling the effect of continuous random variables described by normal, uniform, exponential, beta, and gamma probability distributions, respectively 1. These orthogonal polynomial selections are optimal for these distribution types since the inner product weighting function corresponds 2 to the probability density functions for these continuous distributions. Orthogonal polynomials can be computed for any positive weight function, so these five classical orthogonal polynomials may be augmented with numerically-generated polynomials for other probability distributions (e.g., for lognormal, extreme value, and histogram distributions). When independent standard random variables are used (or computed through transformation), the variable expansions are uncoupled, allowing the polynomial orthogonality properties to be applied on a per-dimension basis. This allows one to mix and match the polynomial basis used for each variable without interference with the spectral projection scheme for the response.

In non-intrusive PCE, simulations are used as black boxes and the

calculation of chaos expansion coefficients for response metrics of

interest is based on a set of simulation response evaluations. To

calculate these response PCE coefficients, two classes of approaches are

available: spectral projection and regression. The spectral projection

approach projects the response against each basis function using inner

products and employs the polynomial orthogonality properties to extract

each coefficient. Each inner product involves a multidimensional

integral over the support range of the weighting function, which can be

evaluated numerically using sampling, tensor-product quadrature, Smolyak

sparse grid [Smo63], or

cubature [Str71] approaches. The regression approach

finds a set of PCE coefficients which best match a set of response

values obtained from either a design of computer experiments (“point

collocation” [Wal03]) or from a randomly selected

subset of tensor Gauss points (“probabilistic

collocation” [Tat95]). Various methods can be used to

solve the resulting linear system, including least squares methods for

over-determined systems and compressed sensing methods for

under-determined systems. Details of these methods are documented in

Linear regression and the necessary specifications

needed to activate these techniques are provided in the documentation

for the polynomial_chaos keyword.

Stochastic collocation (SC) is another stochastic expansion technique for UQ that is closely related to PCE. As for PCE, exponential convergence rates can be obtained under order refinement for integrated statistical quantities of interest, provided that the response functions are smooth with finite variance. The primary distinction is that, whereas PCE estimates coefficients for known multivariate orthogonal polynomial basis functions, SC forms multivariate interpolation polynomial basis functions for known coefficients. The interpolation polynomials may be either local or global and either value-based or gradient-enhanced (four combinations: Lagrange interpolation, Hermite interpolation, piecewise linear spline, and piecewise cubic spline), and may be used within nodal or hierarchical interpolation formulations. Interpolation is performed on structured grids such as tensor-product or sparse grids. Starting from a tensor-product multidimensional interpolation polynomial in the value-based case (Lagrange or piecewise linear spline), we have the feature that the \(i^{th}\) interpolation polynomial has a value of 1 at collocation point \(i\) and a value of 0 for all other collocation points, leading to the use of expansion coefficients that are just the response values at each of the collocation points. In the gradient-enhanced case (Hermite or piecewise cubic spline), SC includes both “type 1” and “type 2” interpolation polynomials, where the former interpolate the values while producing zero gradients and the latter interpolate the gradients while producing zero values. Sparse interpolants are weighted sums of these tensor interpolants;

however, they are only interpolatory for sparse grids based on fully nested rules and will exhibit some interpolation error at the collocation points for sparse grids based on non-nested rules. A key to maximizing performance with SC is performing collocation using the Gauss points and weights from the same optimal orthogonal polynomials used in PCE. For use of standard Gauss integration rules (not nested variants such as Gauss-Patterson or Genz-Keister) within tensor-product quadrature, tensor PCE expansions and tensor SC interpolants are equivalent in that identical polynomial approximations are generated [CGI10]. Moreover, this equivalence can be extended to sparse grids based on standard Gauss rules, provided that a sparse PCE is formed based on a weighted sum of tensor expansions [CEP12].

Note

Stochastic Expansion Methods provides full algorithmic details for the PCE and SC methods.

A recent addition is functional tensor train (FTT) expansions which leverage concepts from data/image compression using products of dimensional basis “cores.” When the response admits a “low rank” representation, this means that the size of the cores required for an accurate recovery is not large and a compressed format for the expansion can be achieved based on a tensor train composition. In Dakota, the basis functions used within the core for each random dimension are univariate orthogonal polynomials, similar to PCE. Solution for the expansion coefficients is based on regression and employs a numerical solution of a regularized nonlinear least squares problem. Both the rank and polynomial order per dimension are resolution controls for the method, and cross-validation procedures are provided to automate the selection of the best settings for a given response data set. Additional FTT theory will be provided in future releases as this capability is promoted to a default part of the Dakota software configuration.

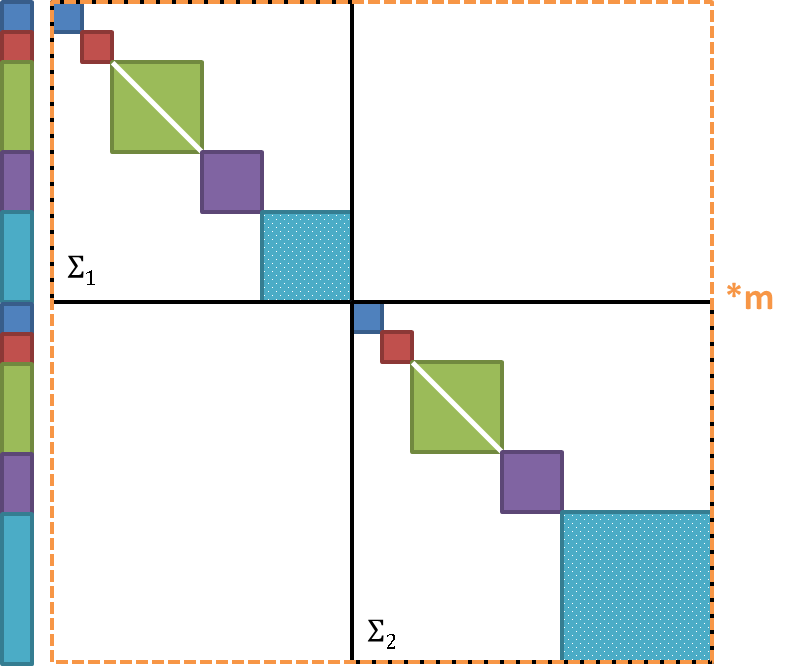

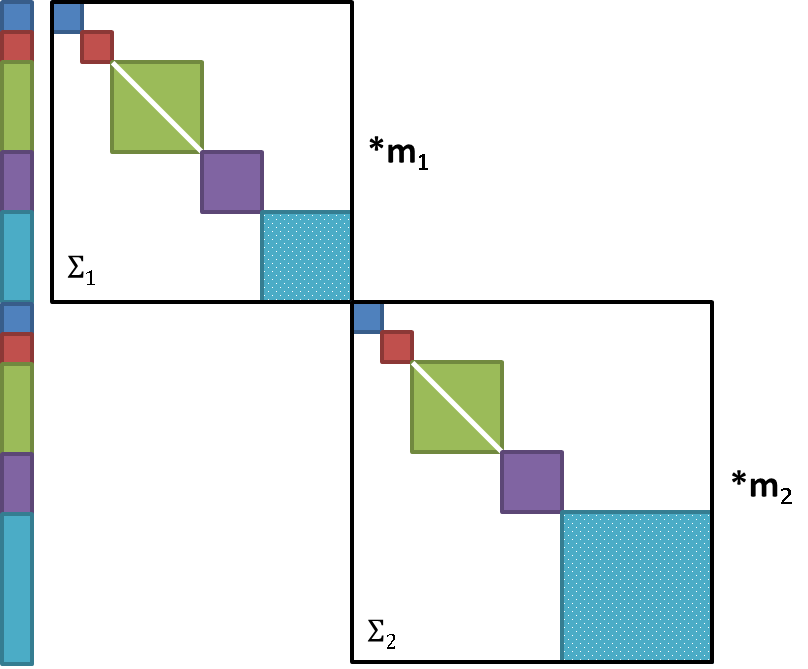

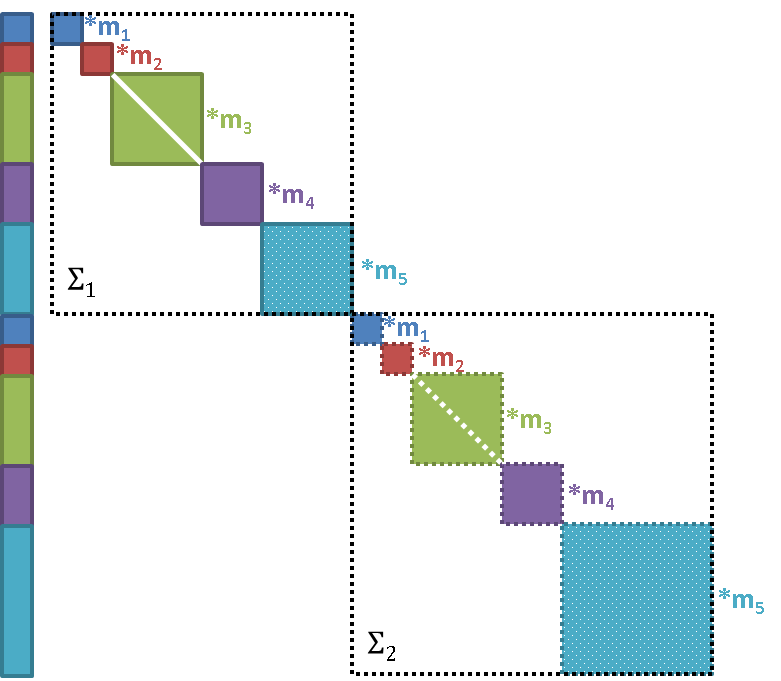

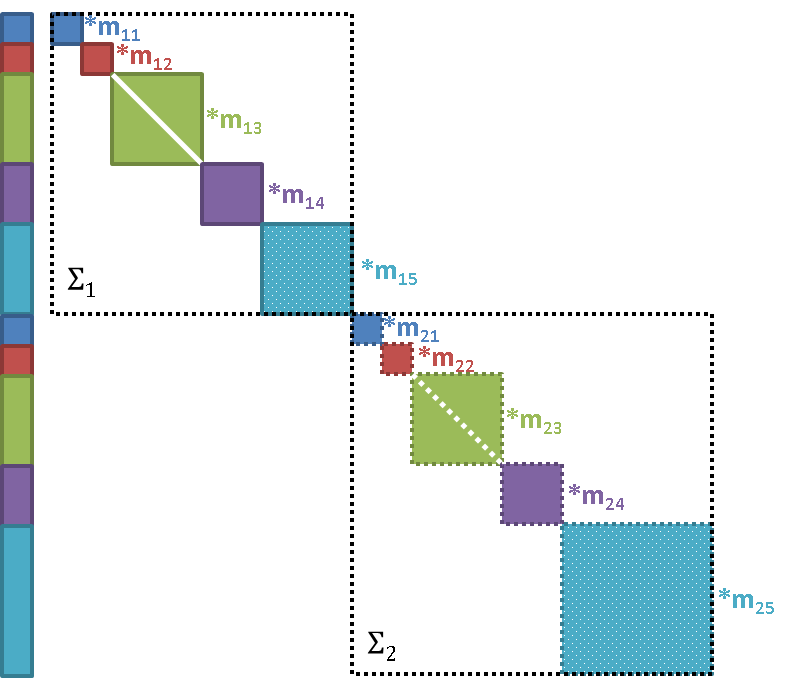

Finally, advanced multilevel and multifidelity approaches are provided

for PCE, SC, and FT (refer to

multilevel_polynomial_chaos,

multifidelity_polynomial_chaos,

multilevel_function_train,

multifidelity_function_train

and multifidelity_stoch_collocation). These approaches decompose the

input-output mapping and form multiple expansions in order to reduce

reliance on the most expensive computational models by integrating

information from low cost modeling alternatives.

Uncertainty Quantification Examples using Stochastic Expansions

Polynomial Chaos Expansion for Rosenbrock

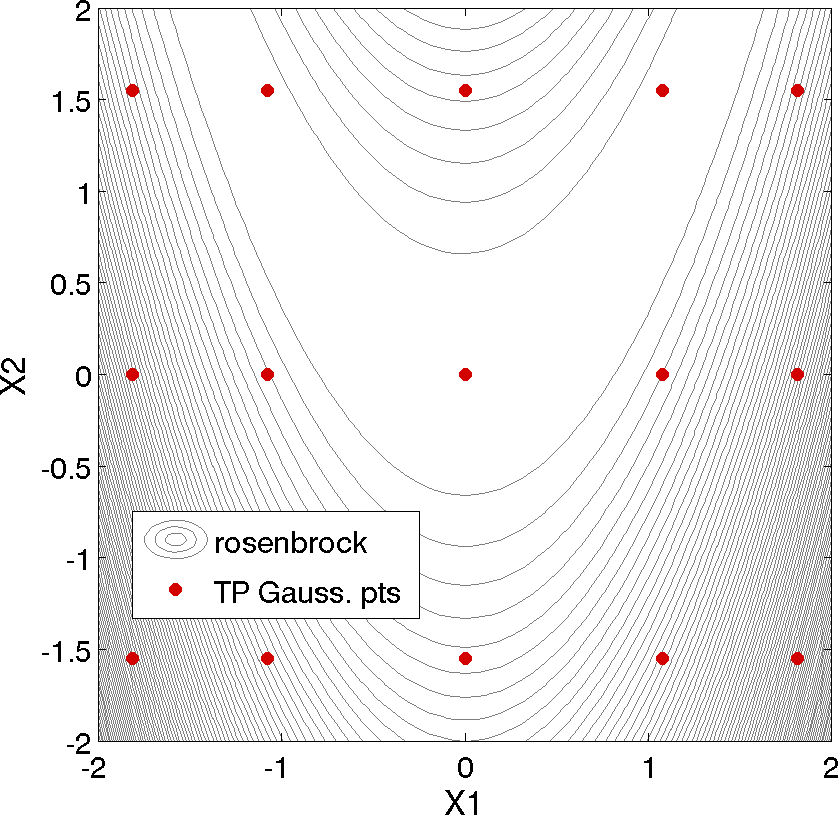

A typical Dakota input file for performing an uncertainty quantification using PCE is shown in Listing 37. In this example, we compute CDF probabilities for six response levels of Rosenbrock’s function. Since Rosenbrock is a fourth order polynomial and we employ a fourth-order expansion using an optimal basis (Legendre for uniform random variables), we can readily obtain a polynomial expansion which exactly matches the Rosenbrock function. In this example, we select Gaussian quadratures using an anisotropic approach (fifth-order quadrature in \(x_1\) and third-order quadrature in \(x_2\)), resulting in a total of 15 function evaluations to compute the PCE coefficients.

dakota/share/dakota/examples/users/rosen_uq_pce.in# Dakota Input File: rosen_uq_pce.in

environment

method

polynomial_chaos

quadrature_order = 5

dimension_preference = 5 3

samples_on_emulator = 10000

seed = 12347

response_levels = .1 1. 50. 100. 500. 1000.

variance_based_decomp #interaction_order = 1

variables

uniform_uncertain = 2

lower_bounds = -2. -2.

upper_bounds = 2. 2.

descriptors = 'x1' 'x2'

interface

analysis_drivers = 'rosenbrock'

direct

responses

response_functions = 1

no_gradients

no_hessians

The tensor product quadature points upon which the expansion is calculated are shown in Fig. 43. The tensor product generates all combinations of values from each individual dimension: it is an all-way pairing of points.

Fig. 43 Rosenbrock polynomial chaos example: tensor product quadrature points.

Once the expansion coefficients have been calculated, some statistics are available analytically and others must be evaluated numerically. For the numerical portion, the input file specifies the use of 10000 samples, which will be evaluated on the expansion to compute the CDF probabilities. In Listing 38, excerpts from the results summary are presented, where we first see a summary of the PCE coefficients which exactly reproduce Rosenbrock for a Legendre polynomial basis. The analytic statistics for mean, standard deviation, and COV are then presented. For example, the mean is 455.66 and the standard deviation is 606.56. The moments are followed by global sensitivity indices (Sobol’ indices).This example shows that variable x1 has the largest main effect (0.497) as compared with variable x2 (0.296) or the interaction between x1 and x2 (0.206). After the global sensitivity indices, the local sensitivities are presented, evaluated at the mean values. Finally, we see the numerical results for the CDF probabilities based on 10000 samples performed on the expansion. For example, the probability that the Rosenbrock function is less than 100 over these two uncertain variables is 0.342. Note that this is a very similar estimate to what was obtained using 200 Monte Carlo samples, with fewer true function evaluations.

Polynomial Chaos coefficients for response_fn_1:

coefficient u1 u2

----------- ---- ----

4.5566666667e+02 P0 P0

-4.0000000000e+00 P1 P0

9.1695238095e+02 P2 P0

-9.9475983006e-14 P3 P0

3.6571428571e+02 P4 P0

-5.3333333333e+02 P0 P1

-3.9968028887e-14 P1 P1

-1.0666666667e+03 P2 P1

-3.3573144265e-13 P3 P1

1.2829737273e-12 P4 P1

2.6666666667e+02 P0 P2

2.2648549702e-13 P1 P2

4.8849813084e-13 P2 P2

2.8754776338e-13 P3 P2

-2.8477220582e-13 P4 P2

-------------------------------------------------------------------

Statistics derived analytically from polynomial expansion:

Moment-based statistics for each response function:

Mean Std Dev Skewness Kurtosis

response_fn_1

expansion: 4.5566666667e+02 6.0656024184e+02

numerical: 4.5566666667e+02 6.0656024184e+02 1.9633285271e+00 3.3633861456e+00

Covariance among response functions:

[[ 3.6791532698e+05 ]]

Local sensitivities for each response function evaluated at uncertain variable means:

response_fn_1:

[ -2.0000000000e+00 2.4055757386e-13 ]

Global sensitivity indices for each response function:

response_fn_1 Sobol indices:

Main Total

4.9746891383e-01 7.0363551328e-01 x1

2.9636448672e-01 5.0253108617e-01 x2